Colours of the same lightness

And other o1-mini stories

Recently I got scared at my own reaction to an answer from ChatGPT. It was the first time this had happened, but while my fear was genuine, I must tamp down any reader expectations: I don’t think my telling of this particular incident will be scary for you. What happened was that I used an answer from ChatGPT without closely checking it. Millions of students writing assignments do this already, but I had never done so, and it feels to me like a significant milestone in humanity’s relations with generative AI. The latest model from OpenAI is of such high quality that I succumbed to laziness and just copied the numbers it gave me with only a cursory check of the results.

A problem with writing about large language models is that different people use them different amounts, and for different purposes. Some people don’t even pay for it and see lower-quality output. The average person probably only knows about one or two of the GPT generations. So as some relevant background, I used to be a maths and physics student, and these days I ask ChatGPT a lot of coding questions (where it is very strong) and occasionally ask it maths questions. The history of the different ChatGPT models doing maths, as judged by me, goes something like this:

3.5 (the first one): totally useless. If I gave it a simple first-year exercise to do, it would know the words that were needed in the solution, but it couldn’t keep track of the numbers for something as simple as a quadratic equation.

4: still useless. Once though, notably, it recognised when it had reached a nonsensical conclusion – it found that an arbitrary angle in some trig problem was always 45 degrees – and went back to the start and tried again (then it made a different error, confusing an arcsin and an arctan).

4o: occasionally useful. I’d have some integral to do, not technically difficult but quite tedious, and in my page of working I’d make a sign error or two, and my final result would be clearly wrong. ChatGPT-4o wouldn’t get the right answer either, but it might chain enough correct equalities together to allow me to do a line-by-line comparison and figure out where I had first made a mistake.

o1-mini: legit good.1

So let’s talk about o1-mini doing maths.2 It’s not perfect by any means, but neither am I.

(I haven’t tried the paid version of Claude from Anthropic, but my impression is that the OpenAI models remain a step above Claude in maths, even as Claude might be stronger for code.)

Colours

I wanted to make a new colour theme for something at work. Let’s say that I had a reference colour of rgb(176, 118, 167), and I wanted a colour of the same brightness that was on a spectrum between black and yellow. I’ve played with colour spaces a little bit in the distant past, so I knew some relevant vocabulary, but the details are hardly second-nature to me.

A simple approach of defining the brightness by the average of the red, green, and blue channels fails, because the human eye is more sensitive to green light and less sensitive to blue light. People have done experiments on human perception of colour, and the results are things like CIEXYZ and CIELAB. The CIELAB space was the one of interest to me, because the L, often written as a variable as L*, stands for ‘lightness’.

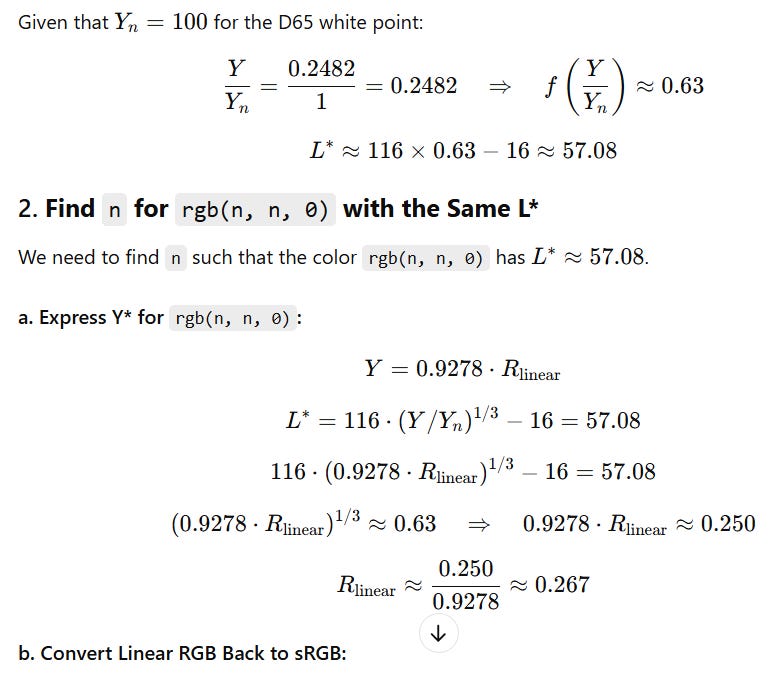

The basic approach then is to find the L* value of my reference colour defined by RGB, using whatever the accepted approximation formulas are, then derive an expression for the L* of rgb(n, n, 0), set this expression equal to the reference L*, and solve for n.

I knew enough that this would be a tedious process: click on that CIELAB Wikipedia article and note that there is no conversion formula given for RGB to L*a*b*; the CIEXYZ article does have some conversions, but there are subtleties to do with gamma correction that it omits, and honestly I don’t want to study the sRGB article as well and figure out whether I can ignore the gamma because it’s already handled by our screens, or if it’s necessary because the XYZ and LAB colour spaces were defined prior to the sRGB standardisation.

It’s a very boring problem to solve, so I asked o1-mini:

I have a color rgb(176, 118, 167). I would like a color of the form rgb(n, n, 0) with the same luminance as defined by CIELAB. What should n be?

I will screenshot the reply, to save myself the boredom of copying in all the LaTeX.

I didn’t want to check all of that. I just made a colour rgb(141, 141, 0) like it said and eyeballed it:

Good enough. Maybe if I were shown these colours without context, I’d pick the new colour as a touch too dark? I’m not sure. Now that I’m writing this post, I feel the need to do at least some checking beyond looking at the colours. The first Google result for an RGB to LAB converter gives an L* of 57.05 for rgb(176, 118, 167), and 56.79 for rgb(141, 141, 0). A little closer to the target is rgb(142, 142, 0) at 57.16, so the worst I can say about o1-mini’s solution is that it might have accumulated enough round-off error that it finished up wrong by less than 1 part in 256.

It might even be the case that o1-mini and the other website are using different, both plausibly valid, conversion schemes. I don’t know, and I don’t care enough to figure it out. I took the o1-mini answer and ran with it.

And that scared me. Is this the future? Was this a good horror story? Am I going to slip into the habit of blindly trusting ChatGPT when it does maths for me, just as I have spent my life trusting a calculator to do arithmetic, or half my life trusting Mathematica/Wolfram? In most cases, I think the answer is ‘no’: the ultimate goal in all the problems I encounter at work is some sort of implementation in software, and I will be able to see if the computer is generating results consistent with what it’s supposed to be doing. But for that one unimportant colour theme task, I took a shortcut that I had never taken before, and I won’t be shocked if I take that shortcut more often in future.

Bivariate normal random variables

This anecdote takes place earlier in time.

Sometimes at work I need to manipulate bivariate normal random variables. One time, for some intermediate calculation, I needed a formula for the probability that one of the two variables was more than delta greater than the other, given that they had a correlation of rho. My caveman brain immediately went to the bivariate standard normal probability density function, and I started outlining the integral in my head,

It might work? Complete the square on y, and you’ll probably get some sort of error function after integrating from minus infinity to x - delta, then see if Wolfram Alpha gives an integral for exp(-x^2) times whatever the error function term is. If that fails, go to some 1960’s-era table of integrals like Abramowitz and Stegun.

But this was soon after the o1 announcement, I’d heard that its maths skills were a step up from the earlier models, and I was not looking forward to all the error function manipulation. So I asked ChatGPT,3

Let X and Y be bivariate normal random variables with correlation rho. For a constant delta, is there a formula for the probability that X > Y + delta?

In its answer, ChatGPT first defined Z = X - Y, and then argued that since X and Y were bivariate normal, any linear combination was also normal, and therefore Z was normal. It is straightforward to find the mean and variance of Z, and then you just plug in to the standard normal CDF.

I had anticipated half an hour of tedious integrals and sign errors. Not only had ChatGPT solved my problem in seconds, but it had done so in a much simpler and more elegant way, demonstrating more sophisticated understanding of the material than I had. It was the first time that ChatGPT had outperformed me in a maths problem, and I was deeply, deeply astonished. I exaggerate only slightly when I say that I spent the next half-hour in an overwhelmed daze; even if it’s not literally true, it feels like a faithful representation of my reaction.

I didn’t do any stats at uni, so my fundamentals aren’t as strong as they might be – I didn’t consciously know about this linear combination property of bivariate normal random variables (although I must have implicitly taken advantage of it many times). But it’s true, and it’s even in the intro to the Wikipedia article for the multivariate normal distribution.

Perhaps the question I asked is in its training data; it could be a second-year assignment problem somewhere. If I’d had this question a few months earlier, I might have tried asking an older model in the GPT-4 class, and probably I would have received the same right answer. The example doesn’t necessarily function as a symbol for the greater mathematical abilities of o1. But it was the first time that I had been bested by a language model in a real-world maths problem, so it has symbolic importance for me.

At work we have a Teams channel for people to share case studies and articles about AI, and I posted an abbreviated version of this story there. An interesting addendum to it is that a colleague queried the AI’s solution, asking if I had verified it numerically; he had tried Googling and had found a very different formula, and he felt that the final ChatGPT formula didn’t behave correctly. It was my human colleague who was mistaken here, and if he had been a “human in the loop”, verifying the AI output before it gets put to use, then the process in this example would have been much slower than blindly trusting the AI.

I am certainly not criticising my colleague for his scepticism and desire to verify the ChatGPT-produced formula – it’s not always going to be right, and hopefully he ended up learning something from ChatGPT just as I had done. But there is a potentially dangerous dynamic here, as people who trust the AI without checking will produce things faster than those who verify, and the AI is so good now that it will often be correct.

Sometimes I am still better than o1-mini

One time I had another pair of standard bivariate normal random variables with correlation rho, and this time I wanted the probability that both were greater than some constant t. It turns out there’s no closed-form solution to this, and o1-mini recommends a formula involving both the standard normal univariate CDF and the bivariate CDF,

The latter function is accessible via scipy.stats.multivariate_normal. But, knowing that I need to go to this multivariate_normal function, the suggested formula may as well be simplified to

and it’s perhaps surprising that o1-mini needs some help to get there.

Perhaps the most interesting conversation I’ve had with o1-mini is on a subject that I must redact, because it is not generic undergrad stats but instead concerns a very business-specific software problem. I haven’t even implemented it yet, so I can’t say something like, “I got a 5x improvement in speed on this problem thanks to o1-mini.” It may not be a convincing anecdote. But it is interesting to me.

In generalities, I felt that an algorithm we were using was not optimal. I described the key features of the dataset and parameters used in the calculation, and asked what a data structure could be used to allow an efficient algorithm to solve the problem we had.

o1-mini suggested a particular algorithm in association with my choice of several data structures. I didn’t understand how the core of its algorithm could work at all, and I had several back-and-forths in which I asked clarifying questions. While I think a human might have done a better job at isolating and correcting my key misunderstanding, o1’s idea was valid and did click into place soon enough, and I was persuaded that, if the associated data structure worked, then this algorithm should be more efficient than our current implementation.

The conversation then turned to the choice of associated structure. I’d had one particular tree type in mind before I’d asked the question, but it turned out to be inappropriate because it was relatively slow to update as new data was entered or old data was removed.

o1-mini gave me some other possible data structures; one of them looked very promising and I asked for more detail. It wrote an outline of an implementation in python, making some elementary but not critical mistakes that will be easy enough for me to correct whenever I try to put it all together.

What I want to convey is that it felt like a sophisticated conversation, and the experience was mostly very pleasant. I don’t have humans nearby who can opine so readily on specific topics of my choosing in graduate-level computer science. My only complaint is that after each clarification or new idea, o1-mini would re-write the entirety of the algorithm and explanation, so that the chat history has a huge amount of redundancy in it. But on most topics it gets it right in one shot, so I’m not complaining too much.

Intelligence

A lot of people don’t think that AI, so-called, is intelligent. Scott Alexander writes a good summary of state of the discourse that I see:

Maybe if an AI can write a publishable scientific paper all on its own? But Sakana can write crappy not-quite-publishable papers. And surely in a few years it will get a little better, and one of its products will sneak over a real journal’s publication threshold, and nobody will be convinced of anything. If an AI can invent a new technology? Someone will train AI on past technologies, have it generate a million new ideas, have some kind of filter that selects them, and produce a slightly better jet engine, and everyone will say this is meaningless. If the same AI can do poetry and chess and math and music at the same time? I think this might have already happened, I can’t even keep track.

And he proposes and endorses the following three options:

So what? Here are some possibilities:

First, maybe we’ve learned that it’s unexpectedly easy to mimic intelligence without having it. This seems closest to ELIZA, which was obviously a cheap trick.

Second, maybe we’ve learned that our ego is so fragile that we’ll always refuse to accord intelligence to mere machines.

Third, maybe we’ve learned that “intelligence” is a meaningless concept, always enacted on levels that don’t themselves seem intelligent. Once we pull away the veil and learn what’s going on, it always looks like search, statistics, or pattern matching. The only difference is between intelligences we understand deeply (which seem boring) and intelligences we don’t understand enough to grasp the tricks (which seem like magical Actual Intelligence).

Commenter Byron crystallises my feelings, which are a modus ponens to the modus tollens of Scott’s third option (lightly copy-edited by me):

Why isn't there a fourth option: maybe these models are actually intelligent (or close to it) in a meaningful sense?

Some years ago I’d thought about what would convince me that an AI was intelligent, and I decided that doing research-level maths that was interesting to humans would be sufficient. Not a brute-force enumeration of tautologies in an axiomatic system, but something that could generate and prove ideas that a mathematician might.

I think o1-mini is close enough. It’s not doing research, but it’s solving undergrad-level problems, and it’s not like I’m publishing new asymptotic bounds in analytic number theory. Whatever separates me from the OpenAI transformer networks, it isn’t the ability to parse mathematical statements written in English and do a page worth of algebra or calculus to successfully solve a problem. Is it all “just” pattern-matching? I spent eight weeks of a third-year course in abstract algebra4 pattern-matching my way to full marks in the weekly assignments without any understanding of what I was doing, just guessing – consistently correctly – where all the symbols needed to go. I think that constitutes evidence of intelligence in me. And was my eventual understanding those algebraic structures not simply recognising patterns at a higher level?

Terry Tao gives o1 somewhat tougher tests than I do:

Here the results were better than previous models, but still slightly disappointing: the new model could work its way to a correct (and well-written) solution *if* provided a lot of hints and prodding, but did not generate the key conceptual ideas on its own, and did make some non-trivial mistakes. The experience seemed roughly on par with trying to advise a mediocre, but not completely incompetent, (static simulation of a) graduate student. However, this was an improvement over previous models, whose capability was closer to an actually incompetent (static simulation of a) graduate student. It may only take one or two further iterations of improved capability (and integration with other tools, such as computer algebra packages and proof assistants) until the level of "(static simulation of a) competent graduate student" is reached, at which point I could see this tool being of significant use in research level tasks.

Some things do separate me from the transformers, and not just my ability to count the number of r’s in ‘strawberry’ (solved by o1?) or that I didn’t need to ingest an Internet’s worth of text to learn what I know.5 There’s a game that some people play, which remains amusing, consisting of taking a famous riddle, tweaking the wording so that there is an obvious solution different from the classic one, and asking it to an LLM. ChatGPT-3.5 would almost always pattern-match to the classic version of the riddle and give the wrong answer; my impression from Twitter screenshots since that era is that GPT-4 class models improved on this front but are still imperfect, and o1 doesn’t seem to be a step up on trick questions the way it is for non-trick technical questions.

A lot of commenters talk down the LLM for such failings. “This is proof that it doesn’t reason,” they might say, as though that makes its ability to teach me second-year statistics any less impressive.

I think that we should also bear in mind the lesson of the Wason selection task, which I will quote here in case you haven’t seen it before. It is terrific fun:

You are shown a set of four cards placed on a table, each of which has a number on one side and a color on the other. The visible faces of the cards show 3, 8, blue and red. Which card(s) must you turn over in order to test that if a card shows an even number on one face, then its opposite face is blue?

A lot of humans get this wrong, and I certainly have to concentrate briefly to solve it. It’s a logic problem that human intuition usually stumbles over.

Humans do not stumble over the following equivalent logic problem:

Four people are drinking at a bar. The first is drinking Diet Coke, and you can’t tell their age. The second is drinking beer, and you can’t tell their age. The third is old, and you can’t see what they’re drinking. The fourth is a young teenager, and you can’t see what they’re drinking. Who do you have to check to test that if someone is drinking alcohol, then they are at least 18 years old?

Humans are not always good at logic (you don’t have to turn over the blue card, but you do have to turn over the red card). After making machines that have rigorously followed deterministic logic for decades, humanity has created a machine that has a much more human-like range of abilities, but also makes its own, new kind of logic errors.

Probably they’ll get fixed up in the next couple of generations, but it’s a remarkable and surprising state of affairs for now.

At what point will an AI be conscious?

If there’s a quale in the matrices, how would we know? Does it depend on the activation function? Our inability to understand the basis of consciousness and experience has so far been profound, and the existence of today’s AI tools has not helped us make any progress that I have seen.

My intellectual bias is very much that a correct explanation of consciousness should be information-theoretic6 – there’s nothing inherently special about the particular chemical substrates of our brains, it’s all about how the components interact. Opposed to that, I have some baser intuitions that seem to me like prejudices with differing levels of justification: that flipping bits in RAM or on a hard disk can’t make it conscious; that a gigantic, slow, neuron-perfect mechanical simulation of a brain would not experience anything; that being “always on” is an important distinguishing property of a human who has a sense of self, as opposed to a computer program that is run on demand.

I assume that dualists have a much easier time of things here in simply rejecting the consciousness of a machine, though I hope for completeness’ sake that there are some dualists who claim that the appropriate manipulation of bits in a data centre will bring forth a new entity in the mental realm.

Will I get automated?

It’s a conversation, hey? But I think that as long as humans can contribute something in the knowledge work of a business, then bosses will find a use for them. I can imagine a GPT-p5.2 fine-tuned (or whatever) on our codebase, which allows one human developer to do the work previously done by five humans. The company could continue chugging along after laying off 80% of the programmers, but our competitors might keep their teams as they are and just ship several times more features than we do. There’d eventually be diminishing returns on the quality of the software, but customers would still prefer to buy the better product.

There is a related question of whose productivity benefits the most from judicious use of AI. On the one hand, if you don’t know how to code, but you have little ideas of things that you would like to script, then ChatGPT is absolutely brilliant at getting you there. The language models currently have a ceiling on their ability, and gains for the top programmers would be more marginal, coming mostly from having boilerplate code written slightly faster. This is the argument that AI brings human abilities closer together, raising the bottom up towards the top.

On the other hand, I think of some of the maths questions that I ask ChatGPT, and people with less knowledge than me wouldn’t even know what to ask, or how to reach the formulation of a problem that ChatGPT can help solve. I feel like the advantage I have over my colleagues in terms of maths ability is further increased by the AI. There is probably something to this in some contexts.

But I think that that latter feeling is partly illusory. Everyone has some things that they know how to do, and some awareness of related material that they know of. You can ask the AI about the things you know of, and you benefit.

Anyway if they do replace me with a bot, because I don’t interface enough with the human world to provide any value-add, at least it will be an exciting time. I will ask the bot a lot of questions.

No conclusion

Currently available, at least to paying subscribers, are the o1-preview and o1-mini models. The latter is faster and trained specifically for maths and code.

Even the first ChatGPT model was good for bite-sized chunks of computer code, presumably because there is an enormous amount of code available in plain text on the Internet, and a lot less LaTeX for maths. Later on I’ll muse about intelligence and reasoning; these debates apply to GPT’s ability to take instructions in human language and turn them into working computer code just as much as they do to its ability to solve my calculus problems.

But a language model solving my calculus problems is new, and in the misguided spirit of treating new AI skills as astonishing and old AI skills as mundane, I will focus for a while on the maths. For a couple of years, I could say that although gradient descent could write better code than me (tweet from 2017!), in my core competency of solving undergrad-assignment-style maths problems, I was not challenged by language models. No longer!

Really though, we shouldn’t lose sight of the code generation being incredible.

Strictly speaking, I asked this question to o1-preview, rather than o1-mini as my link suggests; I didn’t know at the time that o1-mini was, despite the name, better at maths. Anyway o1-preview got it right in the same way as o1-mini does.

The original versions of these questions were all using my work account, which does not permit public link sharing. The prompts I’m using and linking to are close enough to the originals, and the content that I’m sharing here is sufficiently generic that I won’t worry about getting a talking-to.

MATH3103, Algebraic Methods of Mathematical Physics. The lecturer wanted to force us – mostly physics students – to reason from axioms. The closest he ever got to a real-world example was “Let G be a finite group”. Fully two thirds of the class dropped out before the census date; good times.

Does the evolution that led to our brain’s neuronal structure count as some sort of training though?

Not IIT.